Revolutionizing everyday products with artificial intelligence

“Who is Bram Stoker?” Those four words demonstrated the amazing potential of artificial intelligence. They answered the final question of a particularly memorable 2011 Jeopardy! episode. The three competitors were former champions Brad Rutter and Ken Jennings, and Watson, a supercomputer developed by IBM. With that correct final answer, Watson sealed its victory, becoming the first computer to beat human champions on the famous quiz show.

“In a way, Watson winning Jeopardy! seemed unfair to people,” says Jeehwan Kim, the Class ’47 Career Development Professor and a faculty member in MIT’s departments of Mechanical Engineering and Materials Science and Engineering. “At the time, Watson was connected to a supercomputer the size of a room while the human brain is just a few pounds. But the ability to replicate a human brain’s ability to learn is incredibly difficult.”

Kim specializes in machine learning, which relies on algorithms to teach computers how to learn like a human brain. “Machine learning is cognitive computing,” he explains. “Your computer recognizes things without you telling the computer what it’s looking at.”

Machine learning is one example of artificial intelligence in practice. While the phrase “machine learning” often conjures up the science fiction of shows like “Westworld” or “Battlestar Galactica,” smart systems and devices are already woven into the fabric of our daily lives. Computers and phones use face recognition to unlock. Systems sense and adjust the temperature in our homes. Devices answer questions or play our favorite music on demand. Nearly every major car company has entered the race to develop a safe self-driving car.

For any of these products to work, the software and hardware both have to work in perfect synchrony. Cameras, tactile sensors, radar, and lidar (light detection and ranging) all need to function properly to feed information back to computers. Algorithms must be designed so that these machines can process the sensory data and make decisions based on the highest probability of success.

Kim and much of the faculty in MIT’s Department of Mechanical Engineering are developing new software that works with hardware to create intelligent devices. Rather than building the sentient robots romanticized in popular culture, these researchers are working on projects that improve everyday life and make humans safer, more efficient, and better informed.

Making portable devices smarter

Jeehwan Kim holds up a sheet of paper. If he and his team succeed, the power of a supercomputer like IBM’s Watson will one day be shrunk to the size of a single sheet of paper. “We are trying to build an actual physical neural network on a letter paper size,” explains Kim.

To date, most neural networks have been software-based, running on conventional computers built on the von Neumann architecture, in which memory and processing are physically separate. Kim, however, has been using neuromorphic computing methods.

“Neuromorphic computer means portable AI,” says Kim. “So, you build artificial neurons and synapses on a small-scale wafer.” The result is a so-called ‘brain-on-a-chip.’

Rather than processing information as binary digital signals, Kim’s neural network processes it the way an analog device does. Signals, acting as artificial neurons, travel across thousands of arrays to particular cross points, which function like synapses. With thousands of arrays connected, vast amounts of information could be processed at once. For the first time, a portable piece of equipment could mimic the processing power of the brain.
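
The article describes this architecture only at a high level. The usual principle behind analog crossbar arrays is that each cross point stores a synaptic weight as an electrical conductance. Applying input voltages to the rows makes each cross point pass a current proportional to weight times input (Ohm’s law), and those currents add up along each column wire (Kirchhoff’s current law), so the array computes many weighted sums at once. The sketch below models an ideal crossbar in plain Python, assuming no wire resistance and perfectly controlled devices; the function name is hypothetical, and this is not a description of Kim’s chip.

```python
from typing import List

# Hypothetical model of an IDEAL resistive crossbar (no wire resistance, no device noise).
# conductances[i][j] is the weight stored at the cross point of row i and column j.
Matrix = List[List[float]]


def crossbar_column_currents(conductances: Matrix, voltages: List[float]) -> List[float]:
    """Return the current collected on each column: I[j] = sum_i G[i][j] * V[i]."""
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need one input voltage per row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law at one cross point; the column wire sums these currents.
            currents[j] += conductances[i][j] * voltages[i]
    return currents
```

For example, `crossbar_column_currents([[1.0, 2.0], [3.0, 4.0]], [0.5, 0.25])` returns `[1.25, 2.0]`. In hardware the double loop is not executed step by step: all cross points conduct simultaneously, which is where the expected speed and energy advantages of the analog approach come from.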

“The key with this method is you really need to control the artificial synapses well. When you’re talking about thousands of cross points, this poses challenges,” says Kim.

According to Kim, the design and materials that have been used to make these artificial synapses thus far have been less than ideal. The amorphous materials used in neuromorphic chips make it incredibly difficult to control the ions once voltage is applied.

In a Nature Materials study published earlier this year, Kim found that when his team made a chip out of silicon germanium, they were able to control the current flowing out of the synapse and reduce variability to 1 percent. With control over how the synapses react to stimuli, it was time to put their chip to the test.
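
One rough way to see why synapse variability matters is to perturb each stored weight with random noise and measure how far a column’s weighted sum drifts from its ideal value. The toy model below rests on assumptions of our own (independent, multiplicative Gaussian noise on each device; uniformly random weights and inputs) and is not the analysis from the Nature Materials study; treating the 1 percent figure loosely as a per-device spread corresponds to `rel_sigma=0.01`. All function names are hypothetical.

```python
import random
from typing import List


def weighted_sum(weights: List[float], inputs: List[float]) -> float:
    """Current on one crossbar column: sum of conductance * voltage."""
    return sum(w * x for w, x in zip(weights, inputs))


def perturb(weights: List[float], rel_sigma: float, rng: random.Random) -> List[float]:
    """Assumed noise model: each device deviates by a Gaussian fraction of its weight."""
    return [w * (1.0 + rng.gauss(0.0, rel_sigma)) for w in weights]


def mean_relative_error(n_weights: int, rel_sigma: float, trials: int = 200, seed: int = 0) -> float:
    """Average |noisy - ideal| / ideal over random weight and input draws."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    total = 0.0
    for _ in range(trials):
        # Strictly positive weights and inputs keep the ideal sum above zero.
        weights = [rng.uniform(0.1, 1.0) for _ in range(n_weights)]
        inputs = [rng.uniform(0.1, 1.0) for _ in range(n_weights)]
        ideal = weighted_sum(weights, inputs)
        noisy = weighted_sum(perturb(weights, rel_sigma, rng), inputs)
        total += abs(noisy - ideal) / ideal
    return total / trials
```

Because independent device errors partly cancel when many currents are summed, a long column’s relative error in this model comes out smaller than the per-device spread. Correlated errors or drift, which this sketch ignores, would not average out that way.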

“We envision that if we build up the actual neural network with material we can actually do handwriting recognition,” says Kim. In a computer simulation of their new artificial neural network design, the team fed in thousands of handwriting samples, and the network correctly recognized 95 percent of them.

“If you have a camera and an algorithm for the handwriting data set connected to our neural network, you can achieve handwriting recognition,” explains Kim.

Building the physical neural network for handwriting recognition is the team’s next step, but the potential of the technology goes well beyond that one application. “Shrinking the power of a super computer down to a portable size could revolutionize the products we use,” says Kim. “The potential is limitless – we can integrate this technology in our phones, computers, and robots to make them substantially smarter.”

Making homes smarter

While Kim is working on making our portable products more intelligent, Professor Sanjay Sarma and Research Scientist Josh Siegel hope to integrate smart devices within the biggest product we own: our homes.

One evening at home, Sarma noticed that one of his circuit breakers kept tripping. This circuit breaker, known as an arc-fault circuit interrupter (AFCI), is designed to shut off power when it detects an electric arc, in order to prevent fires. AFCIs are effective at preventing fires, but in Sarma’s case there didn’t seem to be any problem. “There was no discernible reason for it to keep going off,” recalls Sarma. “It was incredibly distracting.”

AFCIs are notorious for such ‘nuisance trips,’ which cut power to safe devices unnecessarily. Sarma, who also serves as MIT’s vice president for open learning, turned his frustration into an opportunity. If he could equip the AFCI with smart technologies and connect it to the ‘internet of things,’ he could teach the circuit breaker to tell when a product is safe and when it actually poses a fire risk.

“Think of it like a virus scanner,” explains Siegel. “Virus scanners are connected to a system that updates them with new virus definitions over time.” If Sarma and Siegel could embed similar technology into AFCIs, the circuit breakers could detect exactly what product is being plugged in and learn new object definitions over time.

If, for example, a new vacuum cleaner is plugged into an outlet on the breaker’s circuit and the power shuts off without reason, the smart AFCI can learn that the vacuum is safe and add it to a list of known safe objects. The AFCI learns these definitions with the aid of a neural network. But, unlike Jeehwan Kim’s physical neural network, this network is software-based.
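
The virus-scanner analogy suggests a simple structure: a local list of “definitions” that the breaker consults, extends when it learns something new, and merges with definitions learned elsewhere. The sketch below is a hypothetical illustration of that bookkeeping only, not Sarma and Siegel’s design. It assumes each device can be reduced to a signature string (how such a fingerprint would be computed from the electrical signal is left out), and it is deliberately simplified: a real breaker would presumably need more safeguards than ignoring every arc from a device once judged safe.

```python
from dataclasses import dataclass, field
from typing import Iterable, Set


@dataclass
class SmartBreaker:
    """Hypothetical smart AFCI that keeps 'definitions' of devices known to be safe."""

    # Signatures are an assumed, opaque fingerprint of each device's electrical behavior.
    known_safe: Set[str] = field(default_factory=set)

    def should_trip(self, signature: str, arc_detected: bool) -> bool:
        # Trip on a detected arc unless the device is on the known-safe list.
        return arc_detected and signature not in self.known_safe

    def mark_safe(self, signature: str) -> None:
        # Record a device whose trip turned out to be a nuisance trip.
        self.known_safe.add(signature)

    def merge_definitions(self, shared: Iterable[str]) -> None:
        # Like a virus scanner pulling new definitions: absorb signatures
        # that other breakers have learned (for example, via the cloud).
        self.known_safe.update(shared)
```

A breaker that has marked `"vacuum-01"` as safe stops tripping on it, and a second breaker that calls `merge_definitions` with the first one’s `known_safe` set inherits that knowledge without ever having seen the vacuum.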

The neural network is trained on thousands of data points gathered during simulations of arcing. Algorithms are then written to help the network assess its environment, recognize patterns, and make decisions based on the probability of achieving the desired outcome. With the help of a $35 microcomputer and a sound card, the team can cheaply integrate this technology into circuit breakers.
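
The article does not specify the network’s inputs or architecture. As a toy illustration only, the sketch below assumes one hand-picked feature, the share of a sampled current waveform’s energy that sits in sample-to-sample jumps (arcing tends to add broadband, high-frequency noise, which raises it). It then trains a single logistic neuron, the simplest building block of a neural network, to turn that feature into a probability of arcing. The feature, the function names, and any training values are hypothetical, not the team’s data or model.

```python
import math
from typing import List, Sequence, Tuple


def hf_ratio(samples: Sequence[float]) -> float:
    """Hypothetical feature: energy of sample-to-sample differences over total signal energy.

    A smooth sine wave keeps this low; broadband high-frequency noise raises it.
    """
    diffs = [b - a for a, b in zip(samples, samples[1:])]
    signal_energy = sum(s * s for s in samples) or 1e-12  # guard against an all-zero signal
    return sum(d * d for d in diffs) / signal_energy


def train_logistic(data: List[Tuple[float, int]], lr: float = 0.5, epochs: int = 2000) -> Tuple[float, float]:
    """Fit p(arc | x) = sigmoid(w * x + b) by plain batch gradient descent on log loss."""
    w, b = 0.0, 0.0
    for _ in range(epochs):
        gw = gb = 0.0
        for x, y in data:  # y is 1 for arcing, 0 for normal operation
            p = 1.0 / (1.0 + math.exp(-(w * x + b)))
            gw += (p - y) * x
            gb += p - y
        w -= lr * gw / len(data)
        b -= lr * gb / len(data)
    return w, b


def arc_probability(x: float, w: float, b: float) -> float:
    """Probability of arcing that the trained neuron assigns to feature value x."""
    return 1.0 / (1.0 + math.exp(-(w * x + b)))
```

Trained on made-up pairs such as `[(0.01, 0), (0.02, 0), (0.03, 0), (0.5, 1), (0.6, 1), (0.7, 1)]`, the learned decision boundary falls between the two groups, so a clean sine wave scores below 0.5 and the same wave with strong alternating noise added scores above it. Thresholding that probability, rather than a raw signal level, is one way a breaker could trade off missed arcs against nuisance trips.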

As the smart AFCI learns about the devices it encounters, it can simultaneously share its knowledge and definitions with other homes over the internet of things.

“Internet of things could just as well be called ‘intelligence of things,’” says Sarma. “Smart, local technologies with the aid of the cloud can make our environments adaptive and the user experience seamless.”

Circuit breakers are just one of many ways neural networks can make homes smarter. This kind of technology can control the temperature of your house, detect anomalies such as an intrusion or a burst pipe, and run diagnostics to flag when something needs repair.
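
For anomaly detection, one common baseline (not necessarily what the MIT team uses) is a rolling z-score: estimate what “normal” looks like from recent readings and flag values that fall far outside it. The sketch assumes a single numeric sensor stream, such as hypothetical water-flow readings in which a burst pipe shows up as a sudden spike; the function name, window, and threshold are illustrative defaults, not tuned values.

```python
import statistics
from collections import deque
from typing import Deque, Iterable, List


def flag_anomalies(readings: Iterable[float], window: int = 24, threshold: float = 4.0) -> List[int]:
    """Return indices of readings far from the recent past (hypothetical helper).

    A reading is flagged when it lies more than `threshold` standard deviations
    from the mean of the previous `window` readings.
    """
    history: Deque[float] = deque(maxlen=window)  # only the most recent readings are kept
    flagged: List[int] = []
    for i, value in enumerate(readings):
        if len(history) == window:
            mean = statistics.fmean(history)
            spread = statistics.pstdev(history) or 1e-9  # avoid dividing by zero on flat data
            if abs(value - mean) / spread > threshold:
                flagged.append(i)
        history.append(value)
    return flagged
```

A stream that alternates between 1.0 and 1.2 and then jumps to 9.0 gets only the jump flagged. The window and threshold trade sensitivity against false alarms, the same tension behind an AFCI’s nuisance trips.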

“We’re developing software for monitoring mechanical systems that’s self-learned,” explains Siegel. “You don’t teach these devices all the rules, you teach them how to learn the rules.”

Making manufacturing and design smarter

Artificial intelligence can improve not only how users interact with products, devices, and environments, but also the efficiency with which objects are made, by optimizing the manufacturing and design process.

“Growth in automation along with complementary technologies including 3-D printing, AI, and machine learning compels us to, in the long run, rethink how we design factories and supply chains,” says Associate Professor A. John Hart.

Hart, who has done extensive research in 3-D printing, sees AI as a way to improve quality assurance in manufacturing. 3-D printers that incorporate high-performance sensors capable of analyzing data on the fly will help accelerate the adoption of 3-D printing for mass production.

“Having 3-D printers that learn how to create parts with fewer defects and inspect parts as they make them will be a really big deal — especially when the products you’re making have critical properties such as medical devices or parts for aircraft engines,” Hart explains.

The very process of designing the structure of these parts can also benefit from intelligent software. Associate Professor Maria Yang has been looking at how designers can use automation tools to design more efficiently. “We call it hybrid intelligence for design,” says Yang. “The goal is to enable effective collaboration between intelligent tools and human designers.”

In a recent study, Yang and graduate student Edward Burnell tested a design tool with varying levels of automation. Participants used the software to place nodes for a 2-D truss supporting either a stop sign or a bridge. The tool would then automatically generate optimized solutions, using intelligent algorithms to decide where to connect the nodes and how wide each part should be.
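
The article does not say which algorithms the tool used. To make “optimizing where to connect nodes and how wide each part should be” concrete, here is a deliberately tiny stand-in: a symmetric two-bar truss carrying a single load, where moving the apex node changes the member forces, fully stressed sizing sets each bar’s cross-sectional area, and a brute-force search picks the apex height that uses the least material. The geometry, the sizing rule, and the function names are assumptions for illustration, not the Yang and Burnell tool.

```python
import math
from typing import Tuple


def two_bar_truss_volume(height: float, half_span: float, load: float, allowable_stress: float) -> Tuple[float, float]:
    """Size a symmetric two-bar truss; return (bar_area, total_material_volume).

    The apex node sits `height` above the midpoint between two supports that are
    2 * half_span apart and carries a vertical `load` (e.g. newtons). Vertical
    equilibrium at the apex gives each bar a force F = load / (2 * sin(theta)),
    where theta is the bar's angle above horizontal. Fully stressed sizing sets
    area A = F / allowable_stress (e.g. pascals).
    """
    length = math.hypot(half_span, height)
    sin_theta = height / length
    force = load / (2.0 * sin_theta)
    area = force / allowable_stress
    return area, 2.0 * area * length


def best_apex_height(half_span: float, load: float, allowable_stress: float, steps: int = 1000) -> float:
    """Brute-force search over apex heights (up to 3 * half_span) for the least material."""
    candidates = [half_span * 3.0 * k / steps for k in range(1, steps + 1)]
    return min(candidates, key=lambda h: two_bar_truss_volume(h, half_span, load, allowable_stress)[1])
```

For this geometry the material volume works out to load × (half_span² + height²) / (allowable_stress × height), which is smallest when the height equals the half span (bars at 45 degrees), so the search lands near `half_span`. Real truss design tools must also handle many nodes, buckling, and stiffness limits that this sketch ignores.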

“We’re trying to design smart algorithms that fit with the ways designers already think,” says Burnell.

Making robots smarter

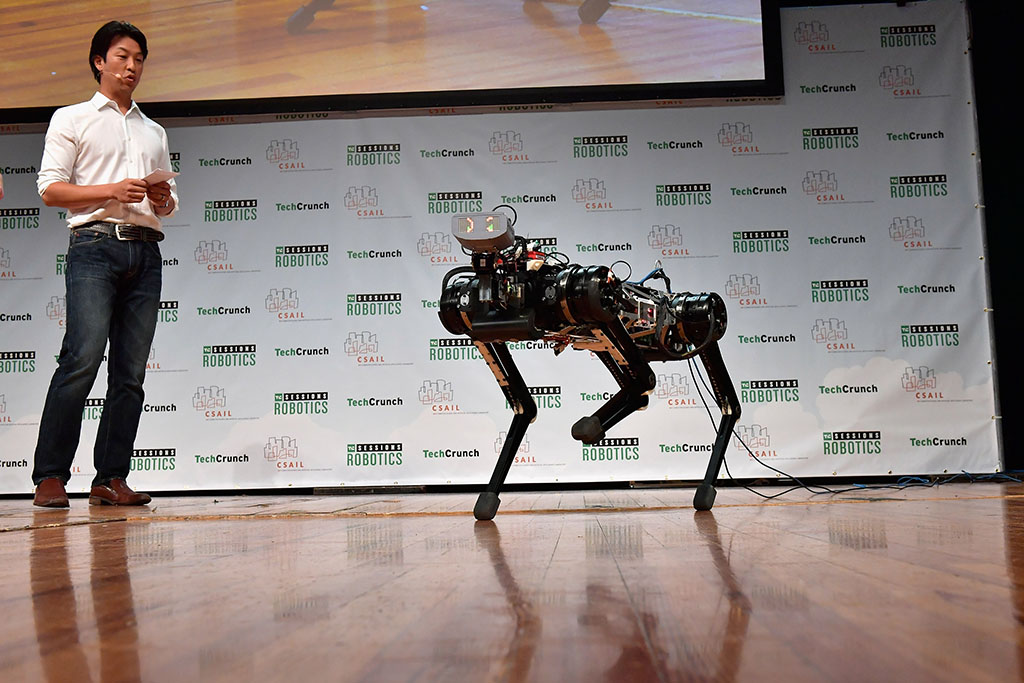

If there is anything on MIT’s campus that most closely resembles the futuristic robots of science fiction, it would be Professor Sangbae Kim’s robotic cheetah. The four-legged creature senses its surrounding environment using LIDAR technologies and moves in response to this information. Much like its namesake, it can run and leap over obstacles.

Kim’s primary focus is on navigation. “We are building a very unique system specially designed for dynamic movement of the robot,” explains Kim. “I believe it is going to reshape the interactive robots in the world. You can think of all kinds of applications — medical, health care, factories.”

Sangbae Kim sees an opportunity to eventually connect his research with the physical neural network his colleague Jeehwan Kim is working on. “If you want the cheetah to recognize people, voice, or gestures, you need a lot of learning and processing,” he says. “Jeehwan’s neural network hardware could possibly enable that someday.”

Combining the power of a portable neural network with a robot capable of skillfully navigating its surroundings could open up a new world of possibilities for human and AI interaction. This is just one example of how researchers in mechanical engineering might one day collaborate to take AI research to the next level.

While we may be decades away from interacting with intelligent robots, artificial intelligence and machine learning have already found their way into our routines. Whether it’s using face and handwriting recognition to protect our information, tapping into the internet of things to keep our homes safe, or helping engineers build and design more efficiently, the benefits of AI technologies are pervasive.

The science fiction fantasy of a world overtaken by robots is far from the truth. “There’s this romantic notion that everything is going to be automatic,” adds Maria Yang. “But I think the reality is you’re going to have tools that will work with people and help make their daily life a bit easier.”